Here comes a new platform feature on ALGOGENE: you can now work directly with Jupyter Notebook!

What is Jupyter Notebook?

Jupyter Notebook is an interactive development environment that lets you create and share documents containing live code, equations, narrative text, figures, links, and more.

You can perform the following tasks with a Jupyter Notebook:

- pre-backtest analysis;

- data transformation;

- numerical simulation;

- statistical modeling;

- data visualization;

- machine learning;

- and much more.

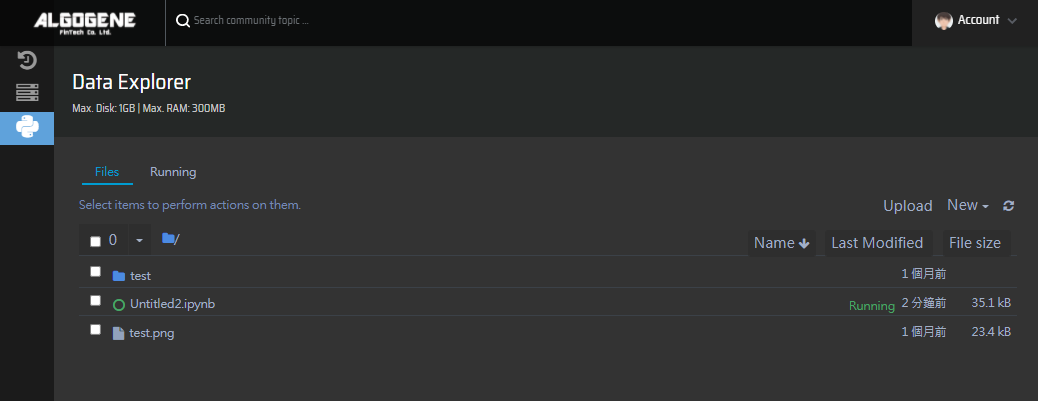

What is Jupyter Dashboard?

In addition to displaying, editing, and running notebook documents, the Jupyter Notebook App has a control panel called the Jupyter Dashboard. It lists your local files and lets you open notebook documents and shut down their kernels. It works much like the file explorer on a desktop computer.

What is Notebook Kernel?

A notebook kernel is a computational engine that executes the code contained in a Notebook document. When you open a Notebook document, ALGOGENE automatically allocates a share of computing resources and launches the associated kernel. The kernel performs the computations and produces the results when the notebook is executed. Depending on the type of computation, a kernel process may be shut down if its CPU or RAM usage exceeds the assigned limit.
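Since a kernel can be shut down for using too much RAM, it can help to estimate the size of a large array before creating it. The sketch below is a rough back-of-the-envelope check; `limit_mb` is a hypothetical budget you choose yourself, because the actual per-kernel limit is not stated here.

```python
def estimate_array_mb(n_rows, n_cols, bytes_per_item=8):
    """Rough memory size (in MB) of a dense n_rows x n_cols array.

    bytes_per_item=8 corresponds to float64, NumPy's default float type.
    """
    return n_rows * n_cols * bytes_per_item / (1024 ** 2)


def fits_in_budget(n_rows, n_cols, limit_mb, bytes_per_item=8):
    """Return True if the array is estimated to fit within limit_mb.

    limit_mb is a hypothetical, user-chosen budget, not an ALGOGENE value.
    """
    return estimate_array_mb(n_rows, n_cols, bytes_per_item) <= limit_mb


# Example: 1,000,000 rows x 10 columns of float64
size_mb = estimate_array_mb(1_000_000, 10)
print(f"Estimated size: {size_mb:.1f} MB")
print("Fits in a 512 MB budget:", fits_in_budget(1_000_000, 10, limit_mb=512))
```

This only counts the array itself. NumPy operations often create temporary copies, so peak memory during a computation can be several times higher.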

How do I get started?

After logging in to the ALGOGENE platform:

- go to [My History] > [Jupyter Notebook];

- once the kernel is initialized, you will be taken to the Jupyter Dashboard;

- you can then create a new notebook document.

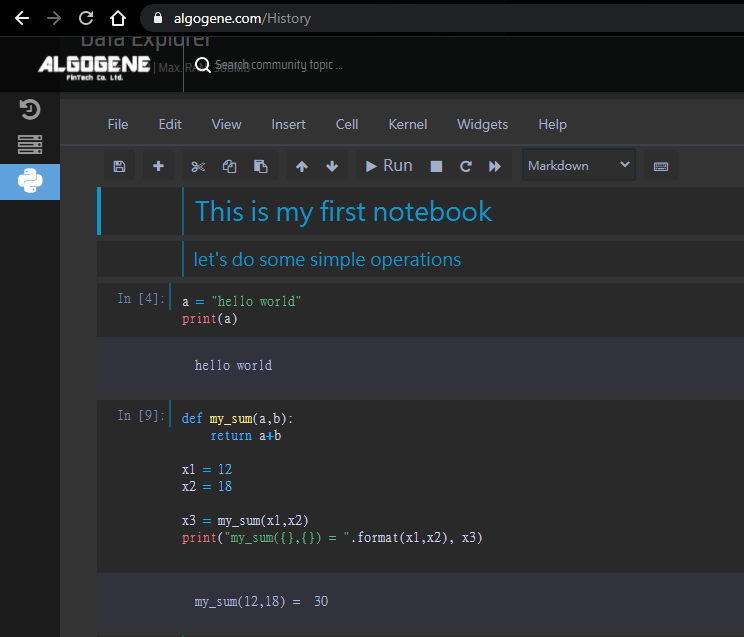
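Once a new notebook is open, a quick first cell can confirm which Python version the kernel runs and whether the packages imported by the later examples (numpy, matplotlib, requests) are installed. This is a minimal sketch; the package list is simply what this article's examples use.

```python
import importlib.util
import sys


def check_environment(packages=("numpy", "matplotlib", "requests")):
    """Print the Python version and whether each package can be imported."""
    status = {name: importlib.util.find_spec(name) is not None for name in packages}
    print("Python", sys.version.split()[0])
    for name, found in status.items():
        print(f"{name:<12} {'available' if found else 'NOT found'}")
    return status


check_environment()
```

`find_spec` only looks the package up without importing it, so the check is fast and has no side effects.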

Example 1: basic operation

A notebook document has two typical types of cell:

- markdown cell - to display text

- code cell - to run live code

We can first insert a markdown cell as the document heading.

We can then create code cells to run some simple operations.

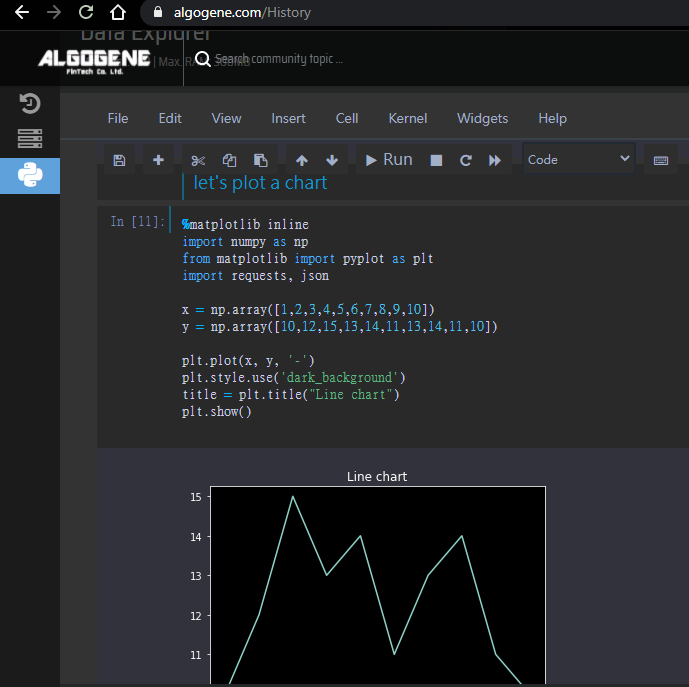
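For instance, a code cell might hold a few lines of arithmetic and a small list calculation. The prices below are made up purely for illustration.

```python
# Simple arithmetic in a code cell
a, b = 7, 3
print(a + b, a - b, a * b, a / b)

# Period-to-period returns from a list of made-up prices
prices = [100.0, 102.0, 101.0, 104.0]
returns = [(p1 - p0) / p0 for p0, p1 in zip(prices, prices[1:])]
print([round(r, 4) for r in returns])
```

In Jupyter, the value of the last expression in a cell is displayed automatically, so a final `returns` on its own line would show the list without `print`.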

Example 2: charting

Let's continue by creating a new code cell. This time we make up a small dataset and plot a simple x-y chart.

To render matplotlib charts inside the notebook, add the magic command `%matplotlib inline` at the beginning of the cell.

```python
%matplotlib inline
import numpy as np
from matplotlib import pyplot as plt

# Set the style before plotting so it applies to the whole chart
plt.style.use('dark_background')

# A small made-up dataset
x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
y = np.array([10, 12, 15, 13, 14, 11, 13, 14, 11, 10])

plt.plot(x, y, '-')    # solid line chart
plt.title('Line chart')
plt.show()
```

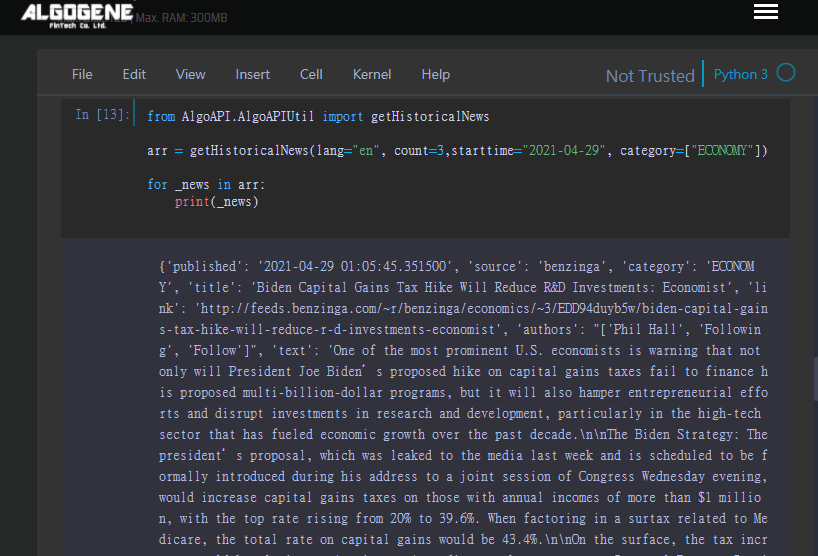

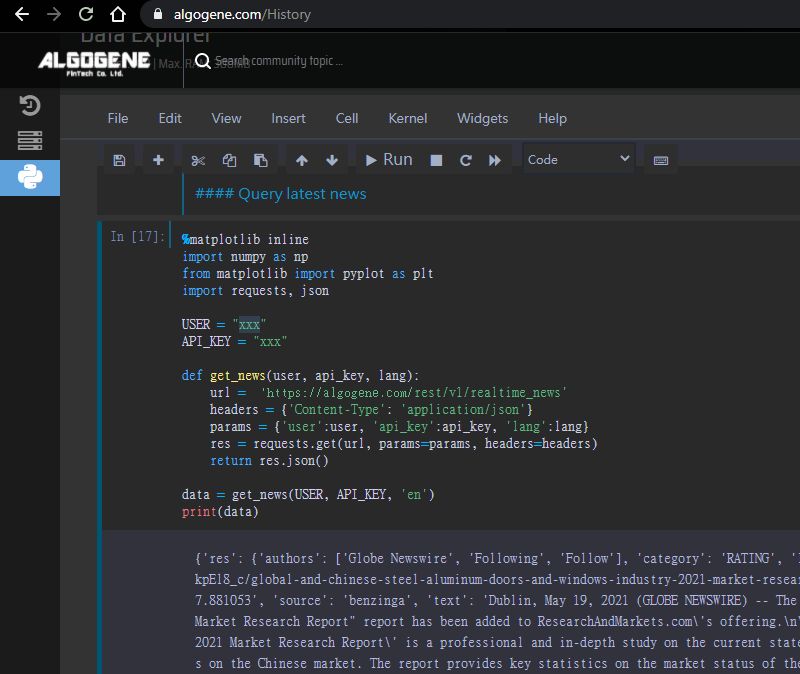
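The data in Example 2 is typed in by hand. To generate an actually random dataset, NumPy's random `Generator` can be used instead. The sketch below also switches to matplotlib's object-oriented interface (`fig, ax`), which keeps titles and labels attached to one specific chart. The seed and the random-walk shape are arbitrary choices for illustration; run it after `%matplotlib inline` as before.

```python
import numpy as np
from matplotlib import pyplot as plt

# Reproducible random dataset: a random walk that starts near 12.
# The seed (42) and the walk itself are arbitrary illustration choices.
rng = np.random.default_rng(seed=42)
x = np.arange(1, 11)
y = 12 + rng.normal(loc=0.0, scale=1.0, size=x.size).cumsum()

# Object-oriented interface: create the figure and axes explicitly,
# then configure this particular chart through the Axes object.
fig, ax = plt.subplots(figsize=(6, 3))
ax.plot(x, y, '-o')
ax.set_title('Random walk')
ax.set_xlabel('x')
ax.set_ylabel('y')
plt.show()
```

Fixing the seed means the same "random" chart is produced every time the cell runs, which makes results easier to compare.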

Example 3: data query

Now, let's try querying some real-time news from the server.

According to the ALGOGENE REST API documentation, we first need our API key, which can be obtained from [Settings] > [User Profile]. For more details, please refer to this post.
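The examples in this article assign the API key directly to `API_KEY` in the cell, which means it is saved with the notebook and could be shared by accident. One alternative, sketched below, is to read the credentials from environment variables and fall back to a prompt. The variable names `ALGOGENE_USER` and `ALGOGENE_API_KEY` are hypothetical; use whatever names you set.

```python
import os
from getpass import getpass


def load_credentials(user_var="ALGOGENE_USER", key_var="ALGOGENE_API_KEY"):
    """Return (user, api_key) without hard-coding them in the notebook.

    Values come from environment variables if present (names are
    hypothetical); otherwise the user is prompted. getpass hides the
    typed key, and Jupyter supports it as an input box.
    """
    user = os.environ.get(user_var) or input("ALGOGENE user: ")
    api_key = os.environ.get(key_var) or getpass("ALGOGENE API key: ")
    return user, api_key
```

You could then write `USER, API_KEY = load_credentials()` in place of the `"xxxx"` placeholders.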

```python
import requests

USER = "xxxx"      # replace with your ALGOGENE user
API_KEY = "xxxx"   # replace with your API key from [Settings] > [User Profile]

def get_news(user, api_key, lang):
    """Query real-time news from the ALGOGENE REST API."""
    url = "https://algogene.com/rest/v1/realtime_news"
    headers = {'Content-Type': 'application/json'}
    params = {'user': user, 'api_key': api_key, 'lang': lang}
    res = requests.get(url, params=params, headers=headers, timeout=10)
    res.raise_for_status()   # stop with a clear error on HTTP failures
    return res.json()

data = get_news(USER, API_KEY, 'en')   # language code, e.g. 'en'
print(data)
```

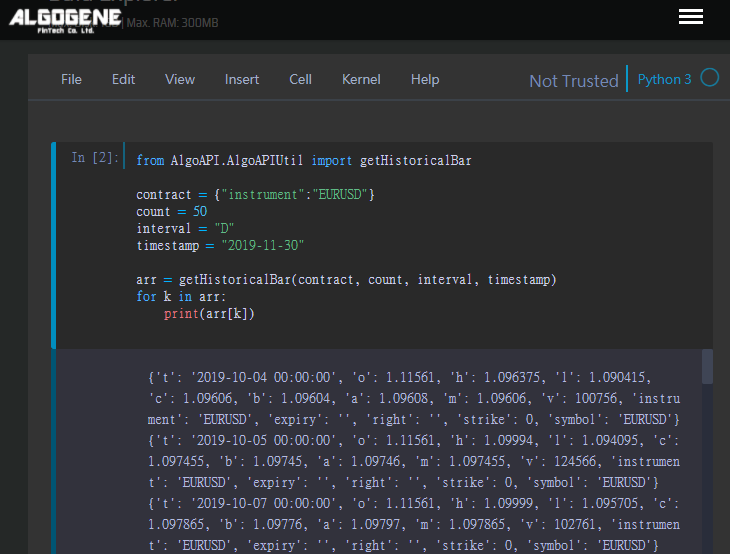

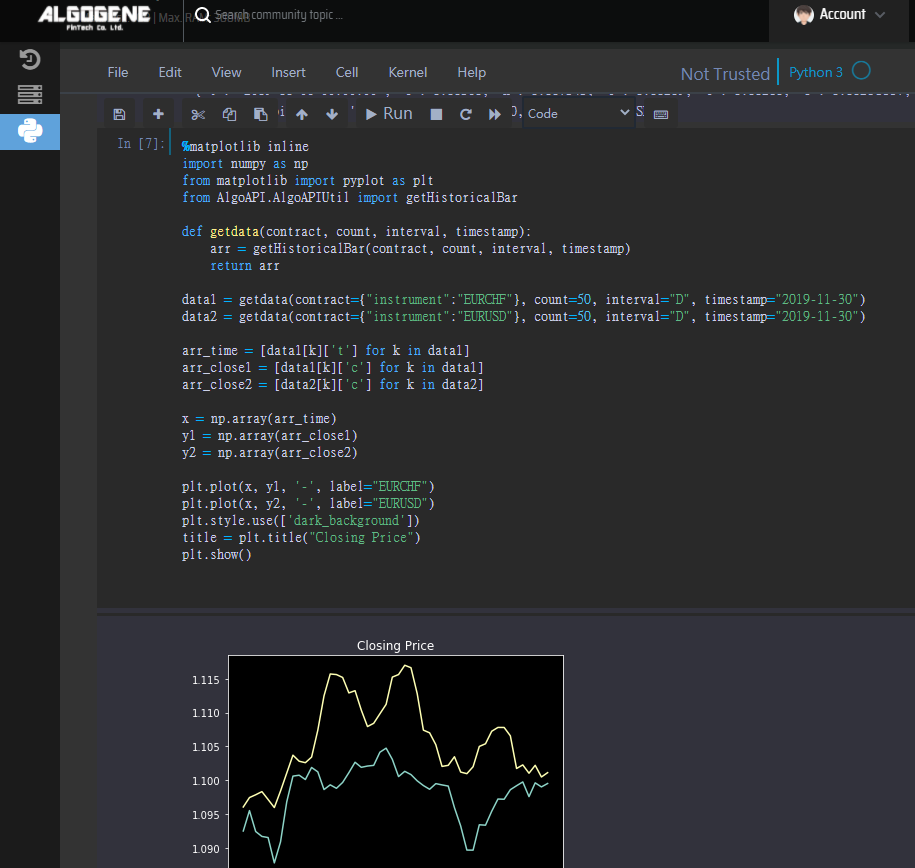

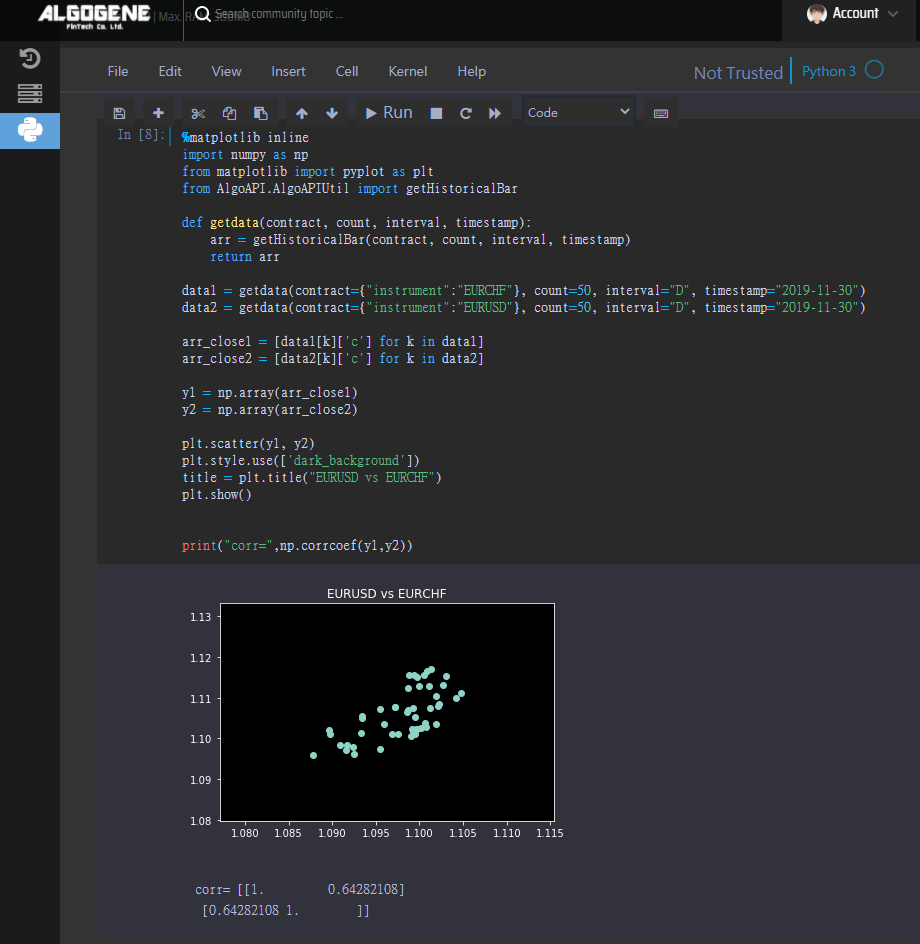

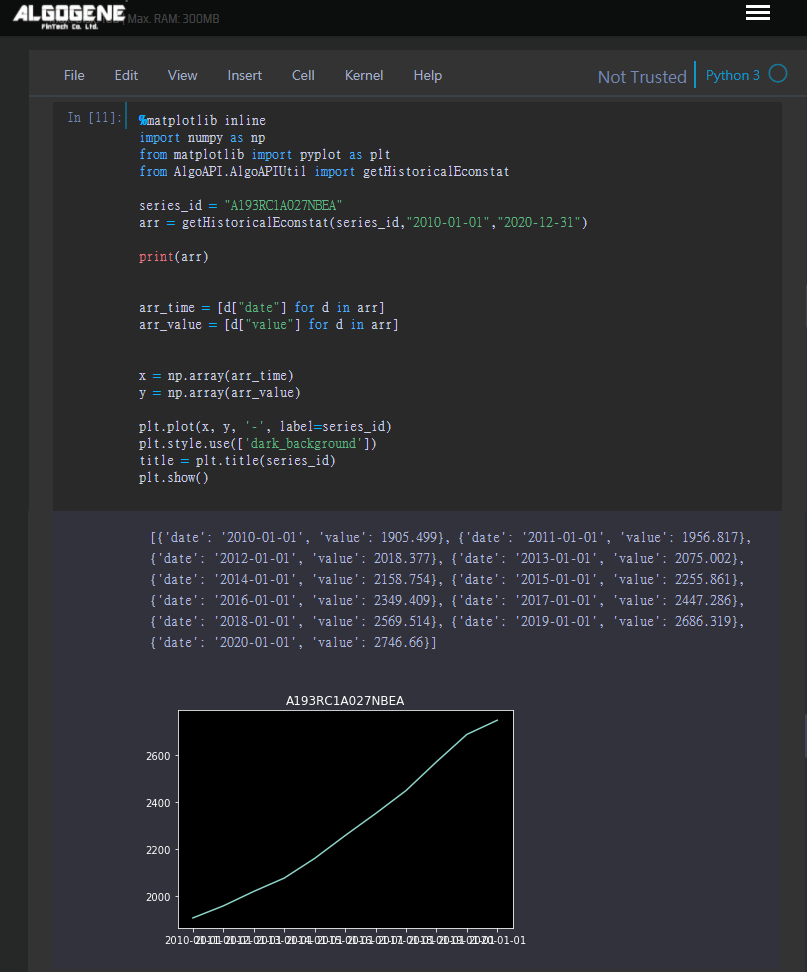

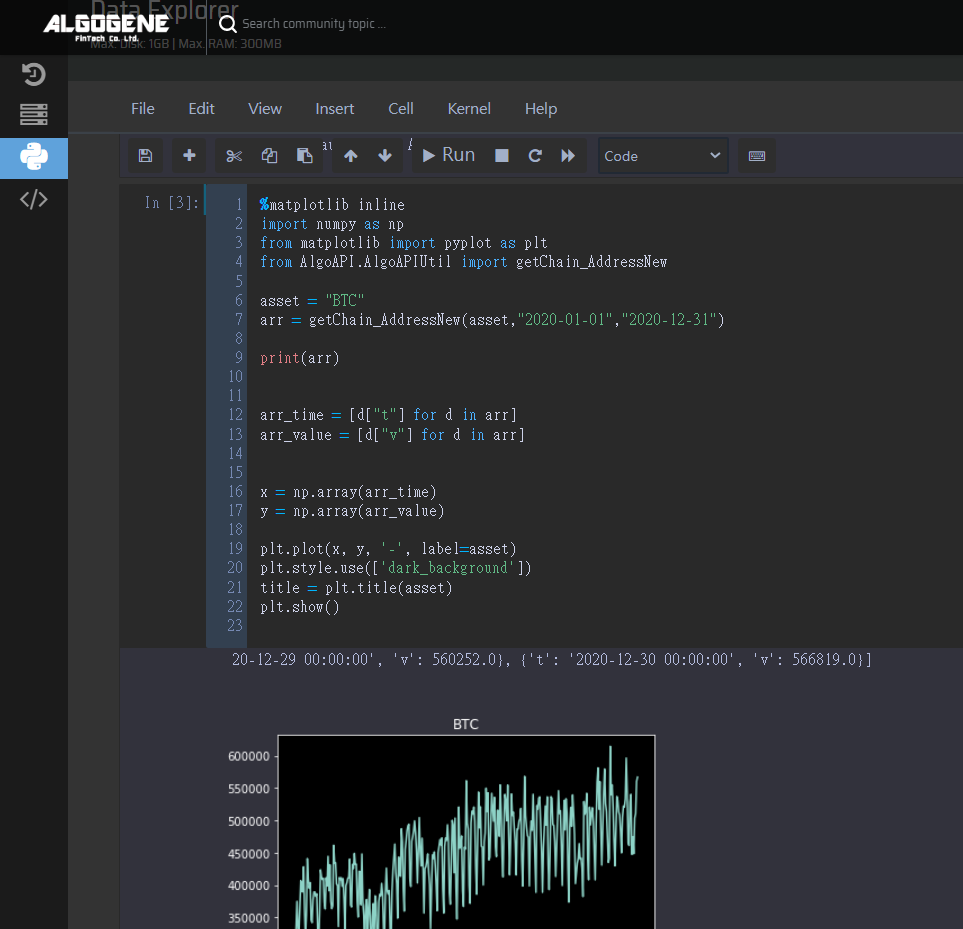

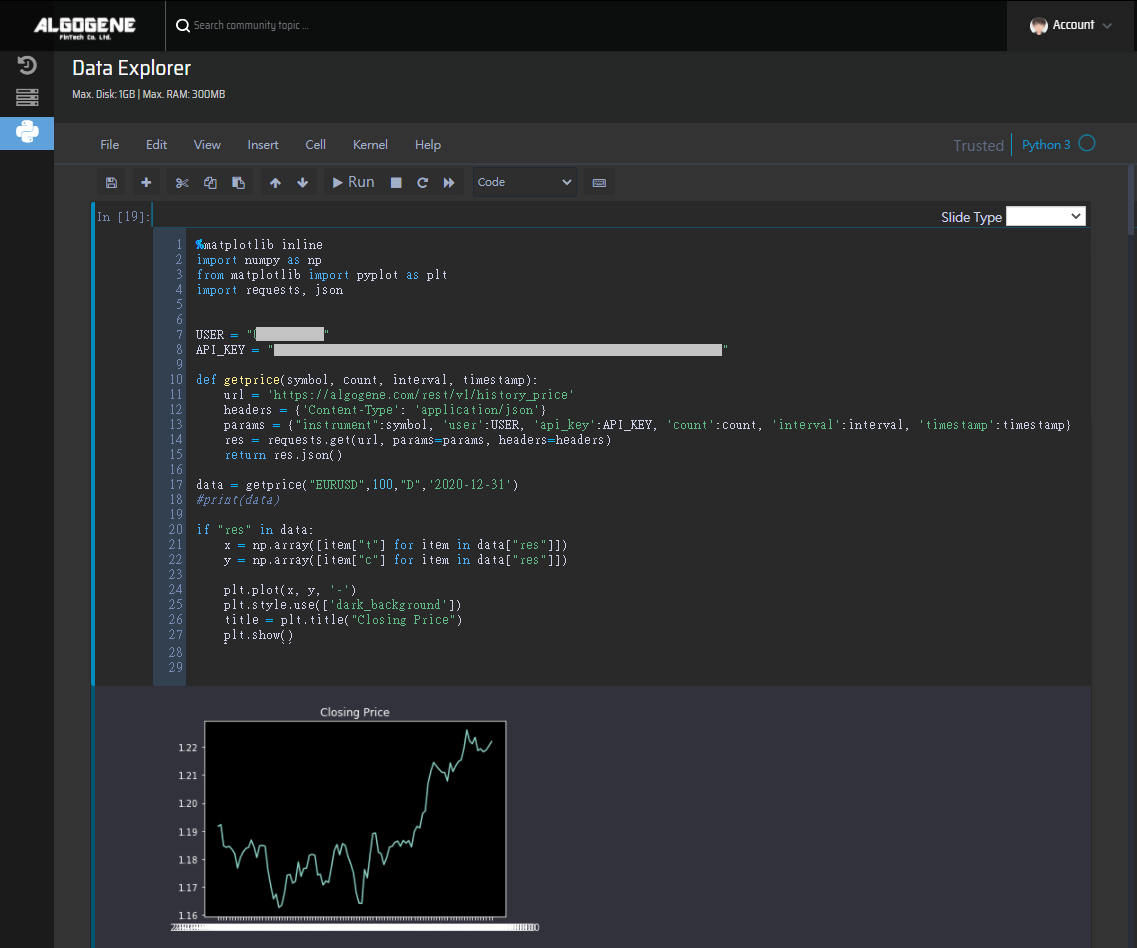
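`requests.get` appends the `params` dictionary to the URL as a query string, so the user and API key travel in the URL itself. The sketch below builds that URL with the standard library, without sending anything, to show what `get_news` requests; `build_news_url` is a hypothetical helper. Because the key is part of the URL, avoid sharing or logging the full request URL.

```python
from urllib.parse import urlencode


def build_news_url(user, api_key, lang):
    """Build the GET URL equivalent to get_news's request (nothing is sent)."""
    base = "https://algogene.com/rest/v1/realtime_news"
    query = urlencode({"user": user, "api_key": api_key, "lang": lang})
    return base + "?" + query


print(build_news_url("xxxx", "xxxx", "en"))
```

`urlencode` also escapes special characters (for example, a space becomes `+`), which is one reason to pass parameters as a dictionary rather than concatenating strings by hand.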

Example 4: visualize market data

This example shows you how to query the historical closing prices of EURUSD and then display the data in a line chart.

```python
%matplotlib inline
import numpy as np
from matplotlib import pyplot as plt
import requests

USER = "xxxx"      # replace with your ALGOGENE user
API_KEY = "xxxx"   # replace with your API key from [Settings] > [User Profile]

def getprice(symbol, count, interval, timestamp):
    url = 'https://algogene.com/rest/v1/history_price'
    headers = {'Content-Type': 'application/json'}
    params = {'instrument': symbol, 'user': USER, 'api_key': API_KEY,
              'count': count, 'interval': interval, 'timestamp': timestamp}
    res = requests.get(url, params=params, headers=headers, timeout=10)
    res.raise_for_status()   # stop with a clear error on HTTP failures
    return res.json()

# Request EURUSD price history: 100 records, interval "D", timestamp 2020-12-31
data = getprice("EURUSD", 100, "D", '2020-12-31')

# Set the style before plotting so it applies to the whole chart
plt.style.use('dark_background')

if "res" in data:
    x = np.array([item["t"] for item in data["res"]])   # timestamps
    y = np.array([item["c"] for item in data["res"]])   # closing prices
    plt.plot(x, y, '-')
    plt.title("Closing Price")
    plt.show()
else:
    print(data)   # show the raw response if it has no "res" field
```
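For pre-backtest analysis, it is often more useful to look at returns than at raw prices. The sketch below computes simple period-to-period returns, assuming only the response layout used above (`data["res"]` is a list of records with the closing price under `"c"`). The sample values are hypothetical, not real EURUSD prices, and the timestamp format in `"t"` is made up.

```python
def closing_returns(data):
    """Simple returns between consecutive closing prices.

    Assumes data["res"] is a list of records with the close under "c",
    as in the example above. Returns an empty list if "res" is missing.
    """
    closes = [float(item["c"]) for item in data.get("res", [])]
    return [(c1 - c0) / c0 for c0, c1 in zip(closes, closes[1:])]


# Hypothetical sample in the same shape as the API response (not real prices)
sample = {"res": [{"t": "2020-12-29", "c": 1.2000},
                  {"t": "2020-12-30", "c": 1.2060},
                  {"t": "2020-12-31", "c": 1.2030}]}
print([round(r, 5) for r in closing_returns(sample)])
```

With real data, `closing_returns(data)` can be called directly on the response from `getprice`.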

Now you have seen what Jupyter Notebook can do. It's time to explore the data and build your own trading model!